Understanding AI Agents: Definition, Differences, and Implications

Artificial Intelligence (AI) is advancing rapidly, and with it comes a variety of AI systems designed for different roles. Two common concepts are AI assistants and AI agents, which are often confused but have distinct characteristics. Moreover, the long-term goals of AI research include developing Artificial General Intelligence (AGI) and even Artificial Superintelligence (ASI), concepts that far exceed today’s AI capabilities. This page clarifies what AI agents are, how they differ from AI assistants, and how both relate to broader ideas like AGI and ASI. We’ll also touch on the significance of AI agents in the legal field, where AI used for legal tasks must be approached with care to align with professional standards and AI laws.

What Are AI Agents?

An AI agent is essentially an AI system endowed with a degree of autonomy. Instead of waiting for step-by-step instructions for every action, an AI agent can act on its own toward a goal within certain boundaries. In technical terms, an artificial intelligence agent is a system that autonomously performs tasks by designing workflows with available tools. This means the agent can plan a series of steps or use various resources (like databases, software applications, or even other AIs) to accomplish a complex task without constant human guidance.

Key features of AI agents include the ability to make decisions, solve problems, and interact with their environment in pursuit of objectives. For example, an AI agent might be tasked with organizing your email inbox: it could sort messages, draft replies using language generation, schedule meetings by consulting your calendar, and so on. The agent does all of this on its own after receiving a high-level goal. Experimental systems such as AutoGPT demonstrate the concept: you give them a goal, and they break it down into subtasks, fetching information and taking actions iteratively until they judge the goal achieved. These agents leverage advanced AI models (often large language models) to understand instructions and determine what actions to take next.
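
To make the loop concrete, here is a minimal Python sketch of the plan-act cycle described above, applied to the inbox example. It is an illustration under stated assumptions, not how AutoGPT or any particular product works: the `plan` function is a hypothetical, hard-coded stand-in for the language model, and the tools (`sort_inbox`, `draft_replies`, `schedule_meetings`) are invented placeholders for real email and calendar integrations.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical tools. A real agent would wrap email, calendar, and search APIs.
def sort_inbox(state: Dict[str, object]) -> str:
    state["sorted"] = True
    return "inbox sorted"

def draft_replies(state: Dict[str, object]) -> str:
    state["drafts"] = 3
    return "3 replies drafted"

def schedule_meetings(state: Dict[str, object]) -> str:
    state["meetings"] = 1
    return "1 meeting scheduled"

TOOLS: Dict[str, Callable[[Dict[str, object]], str]] = {
    "sort_inbox": sort_inbox,
    "draft_replies": draft_replies,
    "schedule_meetings": schedule_meetings,
}

def plan(goal: str, state: Dict[str, object]) -> Optional[str]:
    """Hypothetical stand-in for the language model: pick the next tool, or None when done.

    A real planner would reason over the goal text; this one follows a fixed order.
    """
    for step, done_key in [("sort_inbox", "sorted"),
                           ("draft_replies", "drafts"),
                           ("schedule_meetings", "meetings")]:
        if done_key not in state:
            return step
    return None

def run_agent(goal: str, max_steps: int = 10) -> List[str]:
    state: Dict[str, object] = {}   # working memory shared across steps
    log: List[str] = []
    for _ in range(max_steps):      # hard cap keeps the loop bounded
        action = plan(goal, state)
        if action is None:          # planner judges the goal achieved
            break
        log.append(TOOLS[action](state))
    return log

if __name__ == "__main__":
    print(run_agent("organize my inbox"))
```

Calling `run_agent("organize my inbox")` walks through the three subtasks in order and stops once the planner reports nothing left to do. The `max_steps` cap is a deliberate design choice: it keeps an autonomous loop from running indefinitely if the planner never declares the goal met.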

It’s important to note that AI agents today are typically specialized. They excel at specific kinds of tasks or operate within particular domains. In essence, they belong to what the field calls narrow AI (or Artificial Narrow Intelligence), meaning they do not possess general intelligence beyond their programming. They are powerful at what they do, but they are not human-level minds. To put this in context, let’s contrast AI agents with the more familiar AI assistants, and then look at where AGI and ASI come into play.

AI Assistants vs. AI Agents

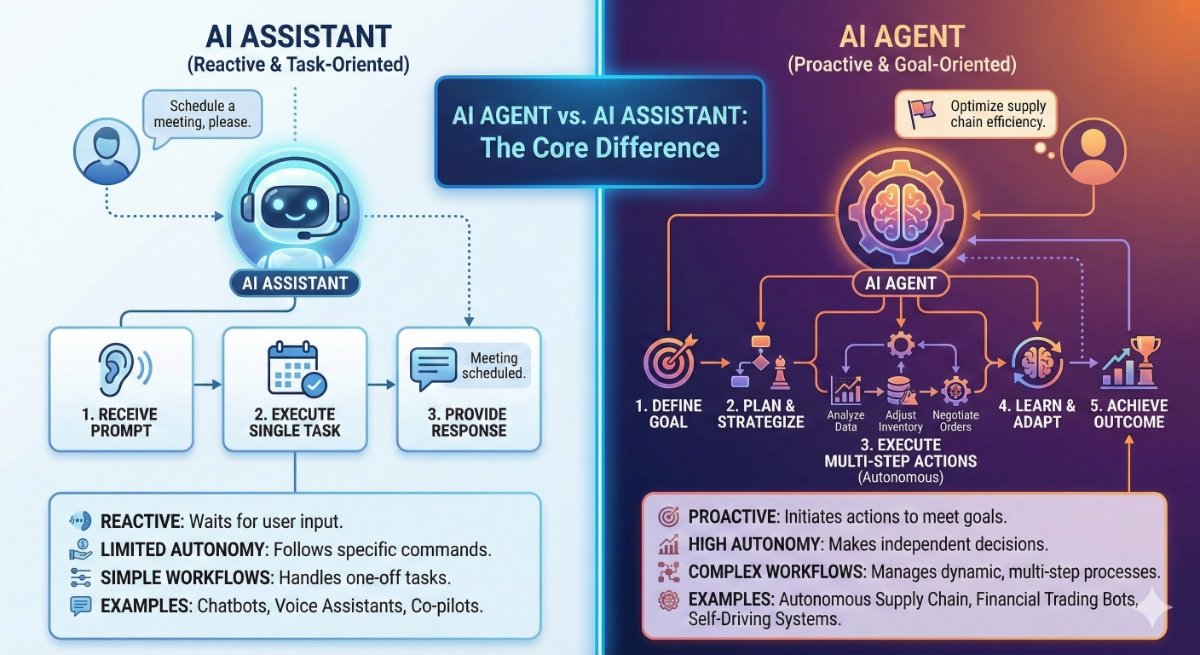

Many people use AI assistants in daily life; think of Siri, Alexa, or Google Assistant. These tools help users by performing tasks reactively, in response to explicit commands or questions. AI agents, by contrast, are more proactive. The fundamental difference can be summed up simply: AI assistants are reactive, executing straightforward tasks based on specific user commands, whereas AI agents are proactive problem-solvers capable of autonomously setting and achieving goals.

In practice, an AI assistant waits for you to prompt it. For example, you ask Siri to set a reminder or find a document, and it will comply within that narrow request. It won’t do anything on its own beyond that. Its scope is tightly defined by what it’s programmed or prompted to do. AI assistants are incredibly useful for handling routine or single-step tasks (like fetching facts, scheduling an appointment, or answering FAQs). They are designed to simplify user interaction with technology, essentially serving as a convenient interface between the user and various services.
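
By contrast, a reactive assistant can be sketched as a simple command dispatcher: one explicit command in, one response out, and nothing further. The command strings and responses below are hypothetical examples, not the behavior of Siri or any real product.

```python
from typing import Callable, Dict

# Hypothetical, fixed set of commands this assistant understands.
HANDLERS: Dict[str, Callable[[], str]] = {
    "set reminder": lambda: "Reminder set.",
    "find document": lambda: "Here is the document you asked for.",
}

def assistant(command: str) -> str:
    """Reactive: answer exactly one request, then stop. No planning, no follow-up."""
    handler = HANDLERS.get(command.strip().lower())
    # Requests outside the predefined scope are declined rather than improvised.
    return handler() if handler else "Sorry, I can't help with that."

if __name__ == "__main__":
    print(assistant("Set reminder"))
    print(assistant("Help me manage my schedule"))
```

The second call shows the limit: a broad goal like managing a schedule falls outside the assistant’s predefined scope, which is exactly the kind of request an agent is meant to take on.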

AI agents, on the other hand, can take a broader mandate. They don’t just respond; they take initiative within their remit. If you tell an AI agent, “Help me manage my schedule and correspondence,” a sufficiently advanced agent could analyze your to-do list, send emails on your behalf, organize meetings, and continue to monitor for new tasks, all with minimal further input. In other words, an AI agent can autonomously plan a multi-step solution to meet a goal you’ve given, adapting to new information along the way. It is goal-oriented and can handle complex, multi-step workflows independently. This requires the agent to have some persistence (memory of what it has done or learned) and the ability to call on tools or data sources as needed.
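
The persistence mentioned above can be illustrated with a small Python sketch: an agent that keeps a queue of incoming tasks and a memory of what it has already handled, so repeated requests are not redone. The class and method names are hypothetical, and the actual planning and tool calls are deliberately left out.

```python
from collections import deque
from typing import Deque, List, Set

class MonitoringAgent:
    """Hypothetical agent that keeps taking on new tasks and remembers completed ones."""

    def __init__(self) -> None:
        self.memory: Set[str] = set()       # persistence: tasks already handled
        self.queue: Deque[str] = deque()    # tasks waiting to be worked on

    def observe(self, new_tasks: List[str]) -> None:
        # Monitoring step: enqueue only tasks not already done or pending.
        for task in new_tasks:
            if task not in self.memory and task not in self.queue:
                self.queue.append(task)

    def work(self) -> List[str]:
        completed: List[str] = []
        while self.queue:
            task = self.queue.popleft()
            # A real agent would plan and call tools here; this sketch only records completion.
            self.memory.add(task)
            completed.append(task)
        return completed

if __name__ == "__main__":
    agent = MonitoringAgent()
    agent.observe(["reply to client", "book conference room"])
    print(agent.work())
    agent.observe(["book conference room", "file memo"])
    print(agent.work())
```

On the second round only "file memo" is processed, because the agent remembers that the conference room was already booked.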

To illustrate the difference: if a law firm uses an AI assistant, a lawyer might ask it a question (“Find me cases about X in Illinois in 2020”) and the assistant will return an answer or list of results. If the firm uses an AI agent, the lawyer could assign a high-level task (“Review these case files and draft a summary memo”), and the agent would autonomously go through documents, pull out key points, perhaps retrieve additional references, and then produce a draft memo for review, all without the lawyer having to guide each step. The assistant is like a skilled clerk you ask for specific help; the agent is more like a junior associate who can be given an objective and will figure out the steps to get there (albeit within a limited scope of ability). This comparison is drawn from the book Infinite Counsel, which discusses the issue in greater detail.

In summary, AI assistants are user-driven and act as supportive tools that require human prompts, whereas AI agents are goal-driven and operate independently, carrying out tasks with greater autonomy. The rise of AI agents represents a move toward systems that adapt as they work, rather than just performing pre-defined actions.

Bullet-point recap:

- AI assistants: reactive helpers. They wait for commands and perform defined tasks in response. They are great at handling specific queries or actions (e.g., answering a question, turning on lights via voice command). They do not initiate action on their own and won’t stray outside their programmed scope.

- AI agents: proactive achievers. They take goals and figure out the tasks needed to reach those goals, often making decisions along the way. They can adapt to new information and operate through multi-step processes autonomously to accomplish complex objectives. Once set in motion, an AI agent needs minimal intervention to continue its workflow.

AGI and ASI: General and Super Intelligence

Where do Artificial General Intelligence (AGI) and Artificial Superintelligence (ASI) fit in? These terms represent the aspirational end of the AI spectrum. AGI and ASI go far beyond the narrow capabilities of today’s AI assistants or agents.

Artificial General Intelligence (AGI) refers to an AI that possesses a broad, human-like intellectual capability. In other words, AGI would be an AI system with the ability to understand, learn, and apply knowledge to perform any intellectual task that a human can do. Such an AI could switch between different contexts and tasks with the flexibility and understanding that we humans take for granted. Importantly, no true AGI exists yet. Today’s AI systems (including the most advanced chatbots and agents) are specialized to some extent. They might excel in language, or vision, or driving, but they are not generally intelligent across all domains. Researchers worldwide are pursuing AGI, but we are still far from achieving true general AI in practice. Current AI agents, while more advanced than simple assistants, are not AGI; they cannot genuinely understand or learn anything beyond their training and programming. They remain narrow in scope even when they string together tasks.

Artificial Superintelligence (ASI) goes a step beyond AGI. ASI is a hypothetical concept of AI that not only matches human intelligence across every domain but vastly surpasses it. An ASI’s intellect would stand to ours roughly as ours stands to that of other animals. ASI might even have “intellectual scope beyond human intelligence” altogether. Such a superintelligent AI could devise solutions and understand complexities in ways we might not even fathom. ASI remains purely theoretical at this point, and experts debate if and when it might ever be achieved. It represents the idea of AI reaching a technological singularity, where it can improve itself and outthink humanity by orders of magnitude. In short, ASI would outperform the brightest human minds in virtually every field, from science and engineering to art and social understanding, and do so with speed and precision far beyond our capabilities.

To put these terms in context: today’s “AI agents” are not AGI or ASI. They are sophisticated narrow AIs. Some see more advanced agents as stepping stones toward AGI. For instance, autonomous agents that learn and self-correct could, in theory, be components in building a general intelligence. But there is a vast gap between even the smartest AI agent and a true general intelligence. AGI would require breakthroughs enabling an AI to have the versatility and depth of cognition of a human brain (learning any subject, dealing with unexpected problems, perhaps possessing common-sense reasoning). ASI is an even more distant concept: essentially an AI that could reinvent technology and knowledge at will, far outpacing humans. For now, these two terms remain goals or thought experiments rather than realities. It’s important not to conflate marketing terms with these scientific concepts: calling a product an “AI agent” doesn’t mean it has anything close to genuine general intelligence. The term simply denotes a degree of autonomy in a specialized task.

AI Agents in the Law: Opportunities and Considerations

AI agents have promising applications in many industries, and the legal field is no exception. In legal practice, AI typically means using tools to handle tasks like legal research, document review, contract analysis, drafting emails or briefs, and even predicting case outcomes based on data. An AI agent in a law firm could, for example, autonomously sift through thousands of documents in discovery, categorize them, and flag the ones most relevant to a case, all before a human ever reads them. It might also handle routine duties like filling out forms or scheduling client meetings by interpreting the lawyer’s calendar and email communications. In essence, such an agent acts as a tireless junior assistant, working around the clock on procedural or informational tasks.
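
As a rough illustration of the discovery example, the Python sketch below categorizes documents and flags the likely relevant ones. It assumes a simple keyword count as a stand-in for whatever model a real review tool would use; the issue terms, the `threshold` value, and the document IDs are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Set

@dataclass
class Document:
    doc_id: str
    text: str

# Hypothetical issue terms; a real tool would use a trained model or
# attorney-approved search terms rather than this fixed set.
ISSUE_TERMS: Set[str] = {"breach", "termination", "indemnify"}

def triage(docs: List[Document], threshold: int = 2) -> Dict[str, List[str]]:
    """Sort documents into 'flagged' and 'other' by how many distinct issue terms they contain."""
    result: Dict[str, List[str]] = {"flagged": [], "other": []}
    for doc in docs:
        words = set(re.findall(r"[a-z]+", doc.text.lower()))
        hits = len(words & ISSUE_TERMS)
        result["flagged" if hits >= threshold else "other"].append(doc.doc_id)
    return result

if __name__ == "__main__":
    docs = [
        Document("D1", "Notice of breach and termination of the lease."),
        Document("D2", "Lunch order for Friday."),
    ]
    print(triage(docs))
```

Whatever the scoring method, the output is a prioritized set of documents for human review, not a final relevance determination.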

However, the intersection of artificial intelligence and law raises special concerns. Lawyers operate under strict ethical and professional standards. Any AI for legal use must comply with confidentiality, accuracy, and accountability requirements. In fact, attorneys have a professional duty of technological competence: in places like Illinois, lawyers are explicitly required to understand relevant technology as part of their obligation to provide competent representation. This means legal professionals must understand the AI tools (including AI agents) that they use, to ensure they are used correctly and safely.

Perhaps the most important point is that even the smartest AI agent does not replace the need for human judgment in law. Legal AI still requires competent oversight from a practiced attorney. An AI agent might draft a contract or analyze a set of cases, but a human lawyer must review its work, confirm its accuracy, and ensure it aligns with legal strategy and ethics. The lawyer remains responsible for the outcome. AI agents can make mistakes, such as misinterpreting a nuance in a case or overlooking a crucial piece of evidence that falls outside their programmed parameters. Therefore, any use of AI agents in law should be “properly supervised” to avoid compromising integrity or client trust. In practical terms, this might involve protocols such as double-checking AI-generated documents, maintaining confidentiality by controlling what data the AI has access to, and staying updated on emerging laws and regulations that govern AI use (which are evolving as governments respond to the rise of AI in society).
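
One of the protocols above, controlling what data the AI can see, can be sketched as a filter applied before any document reaches the agent. This assumes, hypothetically, that each record is tagged with a matter ID and that a supervising attorney maintains the set of matters the agent is cleared to work on; in practice a firm would enforce this in its document-management and access-control systems.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set

@dataclass(frozen=True)
class Record:
    matter_id: str   # hypothetical tag identifying the client matter
    content: str

def visible_to_agent(records: Iterable[Record], cleared_matters: Set[str]) -> List[Record]:
    """Return only records from matters the supervising attorney has cleared for AI use.

    Anything not explicitly cleared is withheld (deny by default).
    """
    return [r for r in records if r.matter_id in cleared_matters]

if __name__ == "__main__":
    records = [
        Record("M-001", "Deposition transcript"),
        Record("M-002", "Privileged strategy memo"),
    ]
    for r in visible_to_agent(records, cleared_matters={"M-001"}):
        print(r.matter_id, r.content)
```

Denying by default means a newly added matter stays invisible to the agent until someone deliberately clears it.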

When implemented carefully, AI agents can be a boon to legal practitioners. They can automate repetitive tasks, e.g., scanning and organizing discovery documents or populating forms, thereby freeing up attorneys to focus on higher-level analytical work. In a sense, this reflects the ideal scenario: lawyers delegate the drudgery to machines while concentrating on strategy, client counseling, and advocacy. Indeed, one of the promises of AI in law is to increase efficiency without sacrificing quality, as long as ethical and operational controls remain in place. Firms adopting AI agents should establish clear guidelines (e.g., requiring human review of any AI-generated output before it goes to a client or court) and ensure data privacy is maintained.
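
A guideline like “human review before anything goes to a client or court” can also be built into the workflow itself. The Python sketch below is one hypothetical way to do that: AI-generated drafts carry an `approved_by` field, and the release step refuses any draft that no attorney has signed off on. The class and function names are illustrative, not part of any particular practice-management product.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Draft:
    title: str
    body: str
    approved_by: Optional[str] = None   # reviewing attorney's name, once approved

class ReviewRequired(Exception):
    """Raised when AI-generated work is released without attorney sign-off."""

def approve(draft: Draft, attorney: str) -> Draft:
    # Recording who approved the draft ties the output to a named, responsible lawyer.
    draft.approved_by = attorney
    return draft

def release(draft: Draft) -> str:
    # Gate: nothing leaves the firm until a named attorney has approved it.
    if not draft.approved_by:
        raise ReviewRequired(f"'{draft.title}' has not been reviewed by an attorney")
    return f"Released '{draft.title}' (approved by {draft.approved_by})"

if __name__ == "__main__":
    memo = Draft("Summary memo", "AI-generated summary of case files")
    try:
        release(memo)
    except ReviewRequired as err:
        print("Blocked:", err)
    approve(memo, "A. Attorney")
    print(release(memo))
```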

AI is becoming a fixture of modern legal practice: forward-thinking firms use AI agents to gain a competitive edge, but they do so within a framework that respects legal ethics and emerging AI laws. By understanding the technology’s capabilities and limitations, lawyers can harness AI agents as powerful tools rather than treat them as black boxes. The goal is to enjoy the efficiency benefits of AI agents, such as faster document processing and data-driven insights, without surrendering control over the critical judgments that only human legal training can provide.

AI agents represent an exciting evolution in artificial intelligence: a move from simple assistants that wait for instructions to more autonomous systems that can pursue goals. They stand apart from AI assistants in their proactivity and independence. Yet it’s crucial to remember that today’s agents are still limited, narrow AI systems, not the sentient AIs of science-fiction movies. Concepts like AGI (a human-level thinking machine) and ASI (a beyond-human superintelligence) remain largely theoretical at present, and AI agents are not equivalent to these advanced intelligences.

For professionals and businesses, including those in the legal industry, understanding the difference between an AI assistant and an AI agent is more than a matter of terminology: it is about knowing what level of autonomy and capability you are handing to a machine. Use AI agents where they make sense, but always with appropriate oversight and clear objectives. AI in the law must be handled with diligence; artificial intelligence and law can complement each other if AI is implemented responsibly. By mastering these concepts, one can embrace AI innovation confidently, leveraging assistants and agents for efficiency while keeping longer-term possibilities like AGI and ASI in view. The key is to integrate AI tools in a way that augments human expertise and adheres to our legal and ethical standards.